Hardware Requirements for Running LLaMA and LLaMA-2 Locally

LLaMA and LLaMA-2 Model Variations

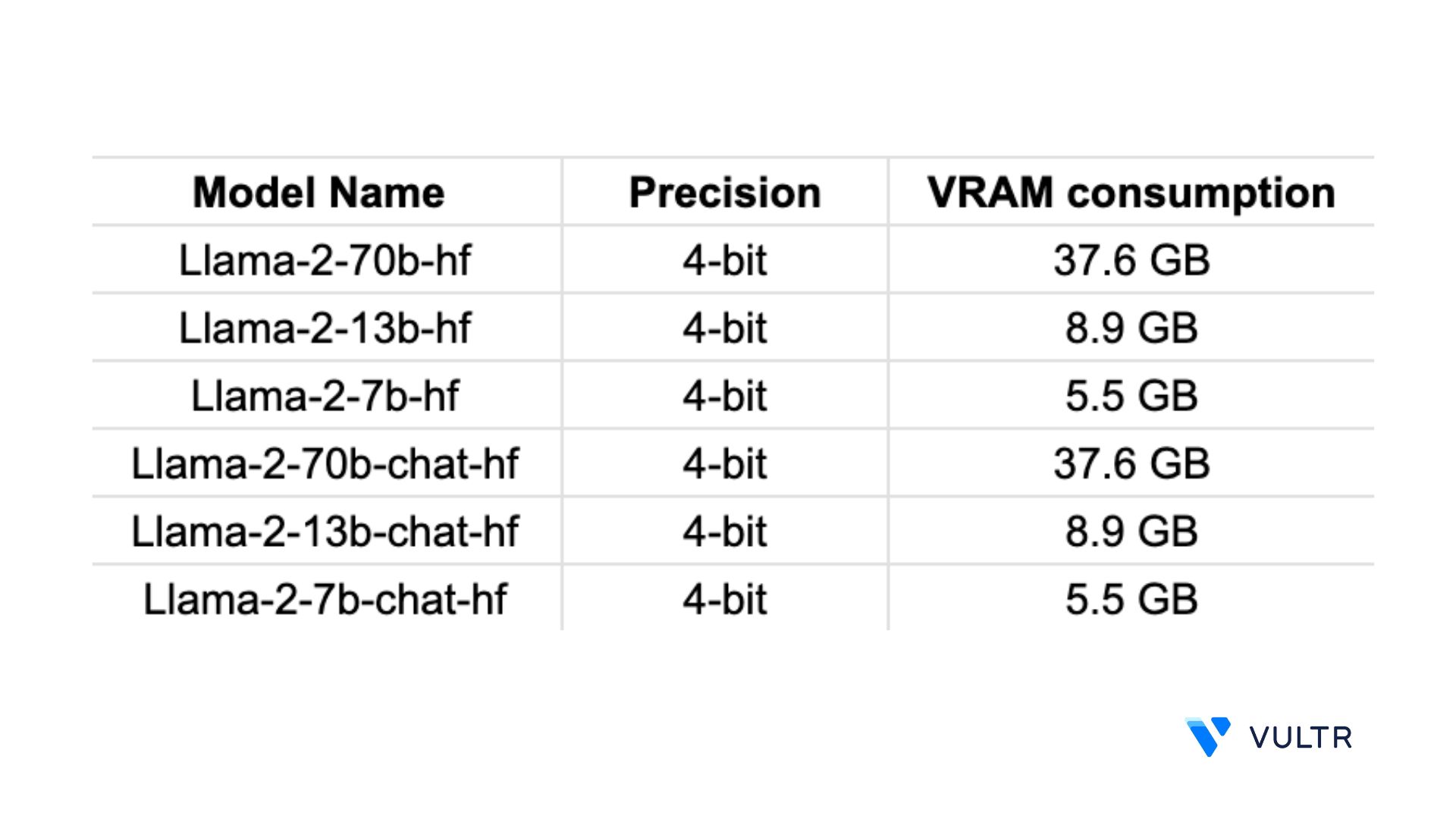

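LLaMA and LLaMA-2 models are distributed in several file formats:

* **GGML:** the original tensor file format of the `ggml` library, used by older llama.cpp builds for CPU inference with optional GPU offload. The name combines the initials of its author, Georgi Gerganov, with "ML".
* **GGUF:** the successor to GGML in llama.cpp, a single-file format that stores model metadata alongside the weights.
* **GPTQ:** a post-training quantization method for GPT-style transformers. GPTQ checkpoints hold quantized weights and are aimed mainly at GPU inference.
* **HF:** the Hugging Face Transformers checkpoint format, typically holding unquantized weights.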
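Single-file formats such as GGUF and GGML can usually be told apart by the first four bytes of the file. The sketch below assumes the magic values used by llama.cpp (`GGUF` as raw bytes, and the legacy `ggml`, `ggmf`, and `ggjt` magics stored as a little-endian 32-bit integer); the function names are hypothetical. GPTQ and HF checkpoints are normally directories of several files and are not covered here.

```python
import struct

# Legacy GGML-family magics, stored in the file as a little-endian uint32.
# Values assumed from llama.cpp's loaders.
LEGACY_MAGICS = {
    0x67676D6C: "GGML (unversioned)",
    0x67676D66: "GGML (ggmf)",
    0x67676A74: "GGML (ggjt)",
}


def classify_header(header: bytes) -> str:
    """Guess the container format from the first four bytes of a model file."""
    if len(header) < 4:
        return "unknown"
    if header[:4] == b"GGUF":
        return "GGUF"
    (magic,) = struct.unpack("<I", header[:4])
    return LEGACY_MAGICS.get(magic, "unknown")


def classify_file(path: str) -> str:
    """Read the first four bytes of `path` and classify them."""
    with open(path, "rb") as f:
        return classify_header(f.read(4))
```

Calling `classify_file` on a downloaded `.bin` or `.gguf` file is a quick way to check which loader it needs before trying to run it.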

llama-2-13b-chat.ggmlv3.q8_0.bin Model

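This file is the 13-billion-parameter LLaMA-2 chat model in GGML v3 format with 8-bit (`q8_0`) quantization. When llama.cpp loads it with every layer placed on the GPU, the load log reports 43/43 layers offloaded to GPU.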
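llama.cpp reports GPU offload in its load log as a line of the form `offloaded N/M layers to GPU`. As a minimal sketch, assuming that log wording, the counts can be pulled out with a regular expression; `parse_offload` is a hypothetical helper name and the sample line is illustrative.

```python
import re

# Matches the offload summary in a llama.cpp load log (wording assumed).
OFFLOAD_RE = re.compile(r"offloaded (\d+)/(\d+) layers to GPU")


def parse_offload(line: str):
    """Return (offloaded, total) layer counts, or None if the line does not match."""
    match = OFFLOAD_RE.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


sample = "llama_model_load_internal: offloaded 43/43 layers to GPU"
result = parse_offload(sample)
if result is not None:
    offloaded, total = result
    print(f"{offloaded} of {total} layers are on the GPU")
```

If the first number is smaller than the second, the remaining layers run on the CPU, which usually means the model did not fit entirely in VRAM.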

Hardware Requirements

The hardware requirements for running LLaMA and LLaMA-2 locally depend on the model size (parameter count) and on the file format and quantization level: smaller or more heavily quantized models need less memory. As a general guideline:

* **CPU:** Intel Core i5-11600K or AMD Ryzen 5 5600X
* **GPU:** NVIDIA GeForce RTX 3090 or AMD Radeon RX 6800 XT
* **RAM:** 64GB or more
* **Storage:** SSD with at least 1TB of free space
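A rough way to see how model size and quantization drive memory needs is to multiply the parameter count by the bits stored per weight. The sketch below covers the weights only; it ignores the KV cache, activations, and runtime overhead, so real usage is higher. The bits-per-weight figures are assumptions: 16 for FP16, and about 8.5 and 4.5 for `q8_0` and `q4_0`, which store a 16-bit scale per block of 32 weights.

```python
def estimate_weight_memory_gib(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


# Assumed effective bits per weight for a few common encodings.
ENCODINGS = {"FP16": 16.0, "q8_0": 8.5, "q4_0": 4.5}

for size_b in (7, 13, 70):
    for name, bits in ENCODINGS.items():
        gib = estimate_weight_memory_gib(size_b, bits)
        print(f"{size_b}B {name}: about {gib:.1f} GiB for weights")
```

Comparing the estimate against available VRAM gives a first indication of whether a model can be fully offloaded to the GPU or has to be split between GPU and system RAM.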

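llama_model_load_internal: ftype = 10 (mostly Q2_K)

This line is not an error. It is an informational message printed by llama.cpp's `llama_model_load_internal` function while it loads a model. The `ftype` value identifies how the file's weights are stored: type 10 means most tensors use `Q2_K`, a 2-bit k-quant format. It trades accuracy for the smallest file size and memory footprint.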
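In a llama.cpp load log, the message has the form `llama_model_load_internal: ftype = 10 (mostly Q2_K)`, where the number is the file type code and the text in parentheses names the dominant quantization. As a small sketch, assuming that wording, both parts can be extracted from a log line; `parse_ftype` is a hypothetical helper name.

```python
import re

# Matches "ftype = <code> (<description>)" with flexible spacing (wording assumed).
FTYPE_RE = re.compile(r"ftype\s*=\s*(\d+)\s*\((.+?)\)")


def parse_ftype(line: str):
    """Return (ftype_code, description), or None if the line does not match."""
    match = FTYPE_RE.search(line)
    if match is None:
        return None
    return int(match.group(1)), match.group(2)


sample = "llama_model_load_internal: ftype      = 10 (mostly Q2_K)"
parsed = parse_ftype(sample)
if parsed is not None:
    code, description = parsed
    print(f"file type {code}: {description}")
```

Checking this value is a quick way to confirm that the file being loaded uses the quantization level you intended to download.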

Unlocking the Power of LLaMA

LLaMA-2 is a large language model with openly available weights, released by Meta under a license that permits both research and commercial use; the original LLaMA was released for research use only. Knowing which file format and quantization level fits the available hardware lets researchers and developers run these models locally.
